Intelligent Agents

Organization

1. Agents and Environments

2. The Concept of Rationality

3. The Nature of Environments

4. The Structure of Agents

Definition of Intelligent Agent

An intelligent agent is anything that

- perceives its environment through sensors and

- acts upon the environment through actuators.

For short, an intelligent agent is written as IA. Since it is the ultimate Artificial Intelligence we have in mind, you can think of IA == AI.

- Example: Thermostat

- Example: Robotic Lawn Mower

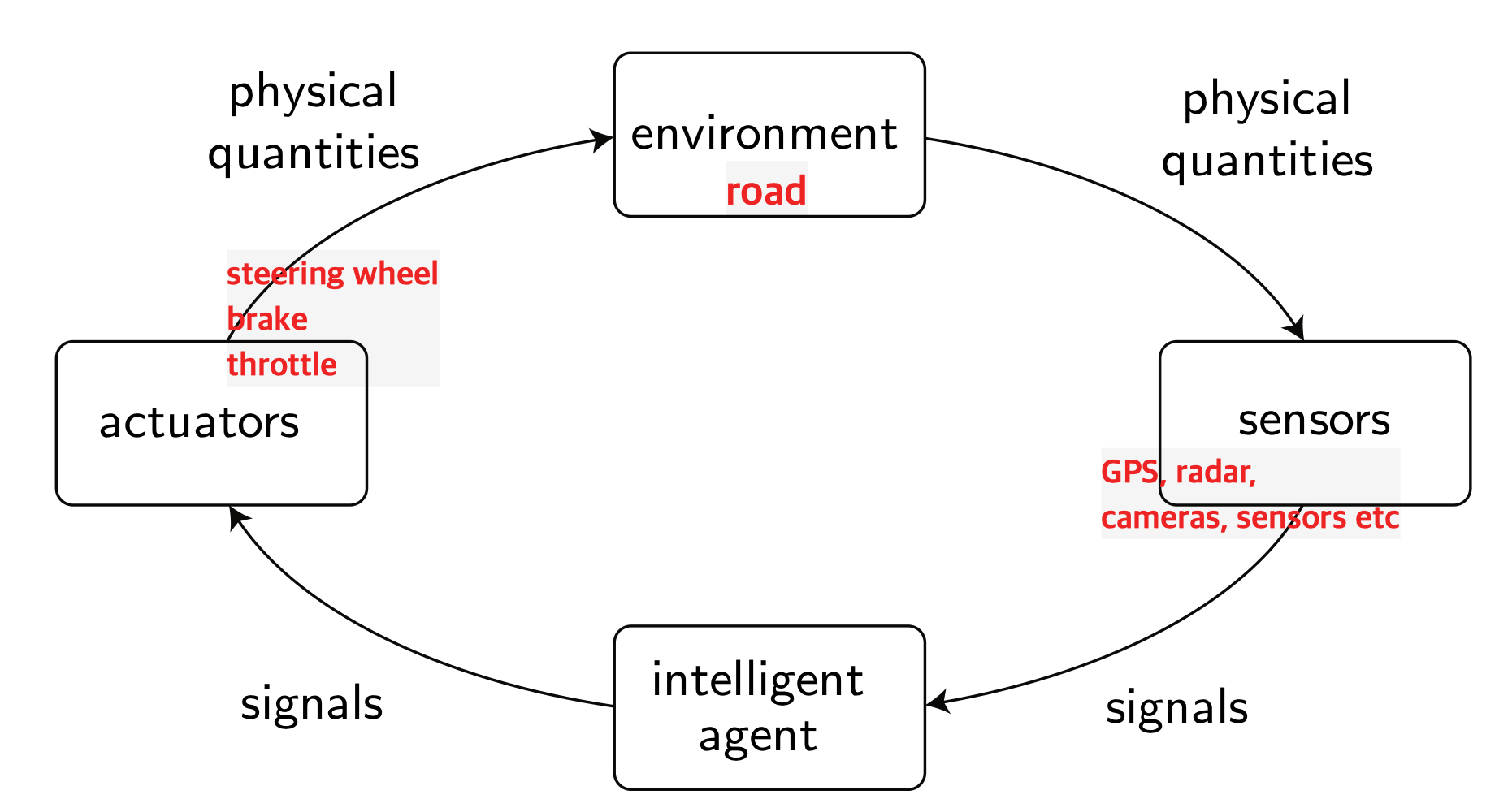

- Example: Automated Car

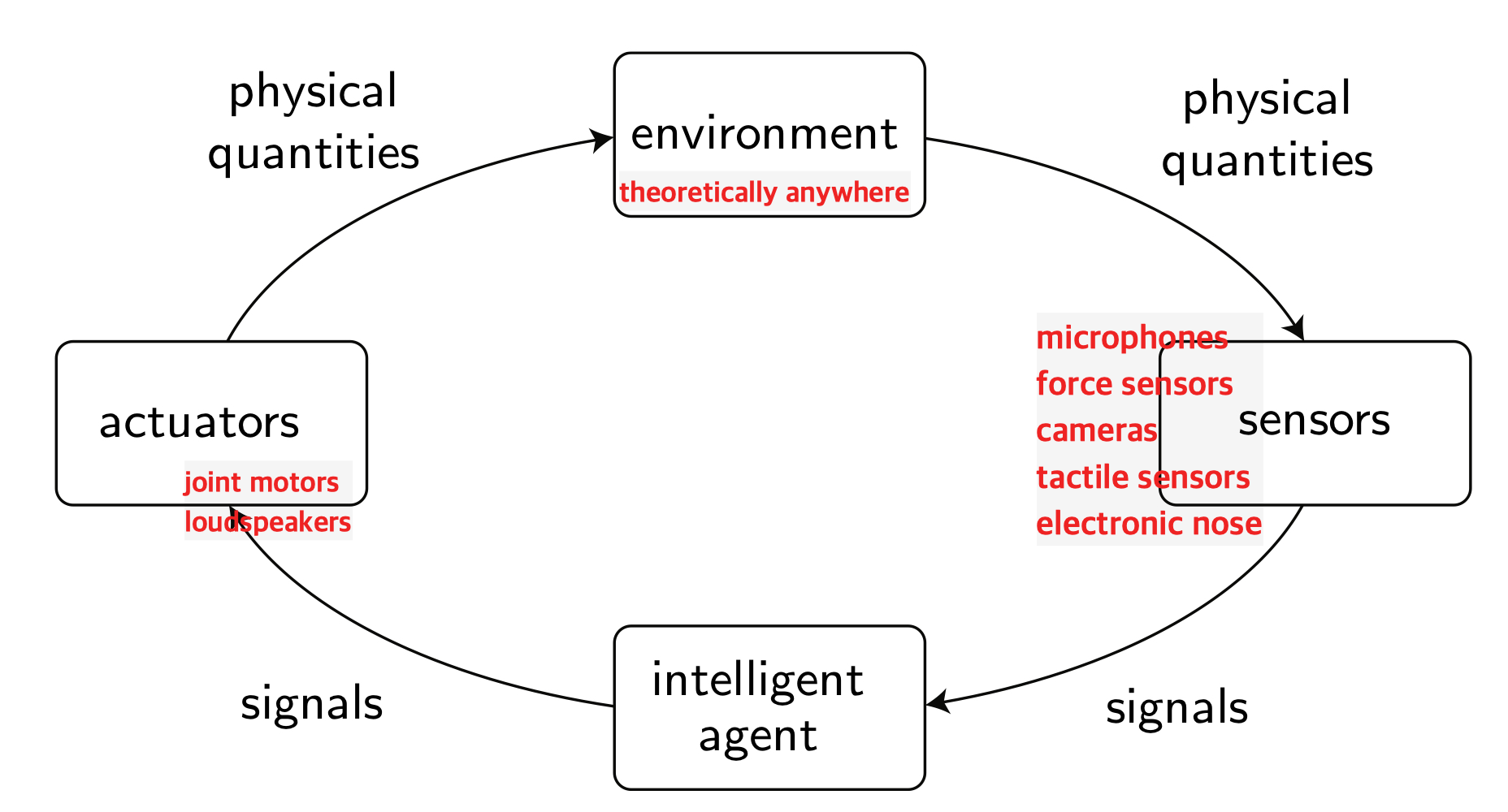

- Example: Humanoid

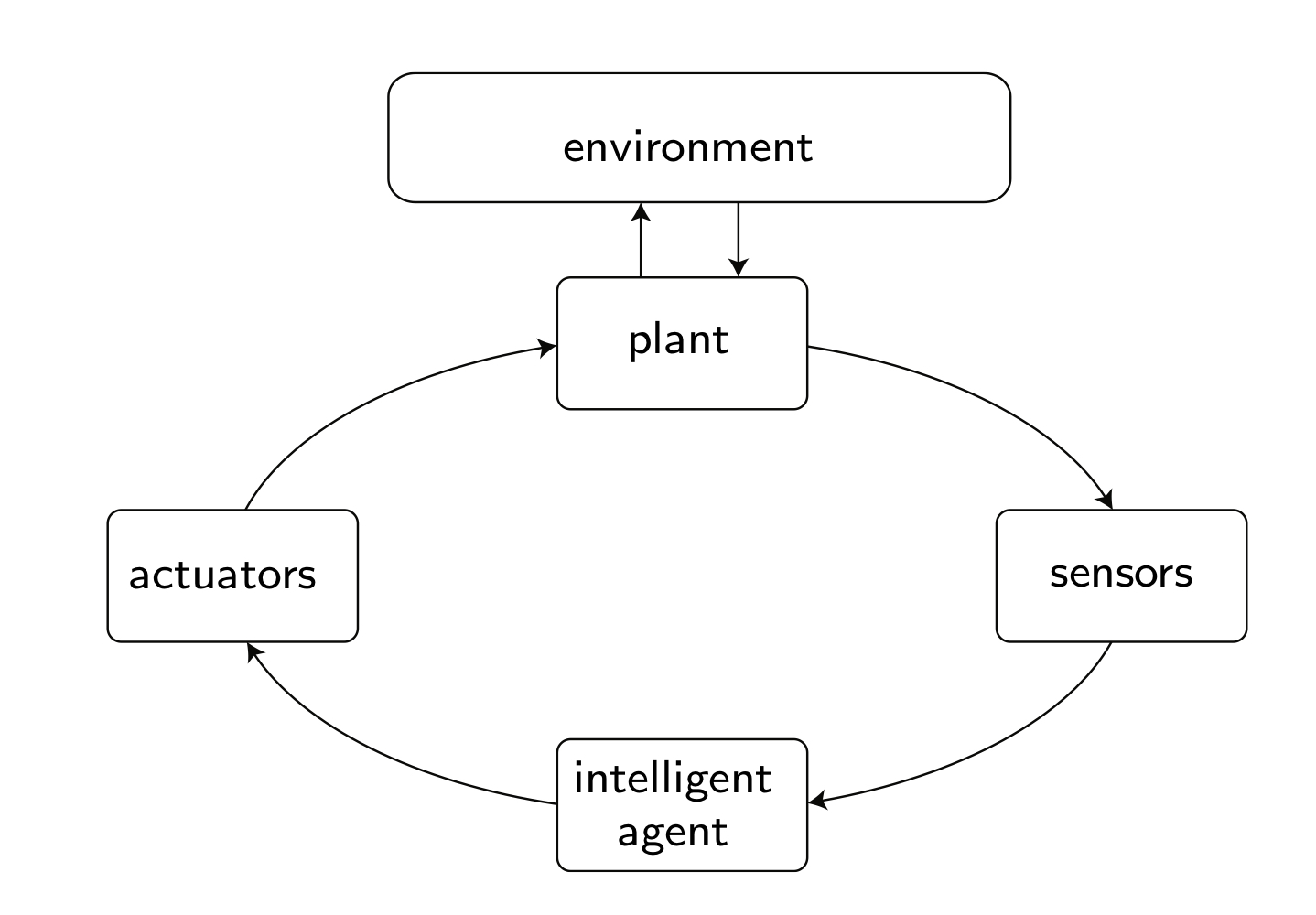

Difference from a Control System View

In control theory, one typically distinguishes between the system one wants to control and the environment. In the AI setting, this distinction is often not made.

/ In control theory, the controlling system and the controlled environment are kept apart, but in AI this distinction matters little.

Think about the Vacuum-Cleaner World

Condition

Percepts (perception results): location and contents, e.g., [A, Dirty]

Actions: Left, Right, Suck, NoOperation

Percept sequence

An agent's percept sequence is the complete history of its perception.

Vacuum cleaner example: [A, Dirty], [A, Clean], [B, Clean], [A, Clean]

The behavior of an agent can be fully described by its agent function:

Agent function

An agent function maps any given percept sequence to an action.

Depending on how much of the percept sequence it takes into account, the agent can make smarter choices. Why?

Tabular Agent Function

| Percept sequence | Action |
|---|---|
| [A, Clean] | Right |
| [A, Dirty] | Suck |
| [B, Clean] | Left |
| [B, Dirty] | Suck |
| [A, Clean], [A, Clean] | Right |
| [A, Clean], [A, Dirty] | Suck |
| ... | ... |
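A table-driven agent simply stores the percept sequence and looks up the whole history in the table. The sketch below is a minimal illustration under assumptions: the names `make_table_driven_agent` and `TABLE` are hypothetical, only the listed rows are included, and returning `NoOperation` for a missing sequence is our own choice because the table does not specify it.

```python
from typing import Callable, Dict, List, Tuple

Percept = Tuple[str, str]  # (location, status), e.g. ("A", "Dirty")


def make_table_driven_agent(
    table: Dict[Tuple[Percept, ...], str]
) -> Callable[[Percept], str]:
    """Return an agent program that looks up the whole percept sequence."""
    percepts: List[Percept] = []  # percept sequence seen so far

    def program(percept: Percept) -> str:
        percepts.append(percept)
        # Key = entire history; unlisted sequences fall back to NoOperation
        # (an assumption: the table does not say what happens).
        return table.get(tuple(percepts), "NoOperation")

    return program


# The listed rows of the table ("..." rows omitted).
TABLE: Dict[Tuple[Percept, ...], str] = {
    (("A", "Clean"),): "Right",
    (("A", "Dirty"),): "Suck",
    (("B", "Clean"),): "Left",
    (("B", "Dirty"),): "Suck",
    (("A", "Clean"), ("A", "Clean")): "Right",
    (("A", "Clean"), ("A", "Dirty")): "Suck",
}

agent = make_table_driven_agent(TABLE)
print(agent(("A", "Clean")))  # Right
print(agent(("A", "Dirty")))  # Suck  (key: [A, Clean], [A, Dirty])
```

Every new step adds a level of keys, which is why such tables explode in size.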

An agent program realizing this agent function:

function Reflex-Vacuum-Agent([location, status]) returns an action
  if status = Dirty then return Suck
  else if location = A then return Right
  else if location = B then return Left
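The pseudocode translates almost line for line into Python. This is a sketch: the function name `reflex_vacuum_agent` is our own, and raising an error for a location other than A or B is an added assumption, since the pseudocode only covers two squares.

```python
from typing import Tuple


def reflex_vacuum_agent(percept: Tuple[str, str]) -> str:
    """Python version of Reflex-Vacuum-Agent: uses only the current percept."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    elif location == "A":
        return "Right"
    elif location == "B":
        return "Left"
    # The pseudocode only covers squares A and B.
    raise ValueError(f"unknown location: {location!r}")


for p in [("A", "Clean"), ("A", "Dirty"), ("B", "Clean"), ("B", "Dirty")]:
    print(p, "->", reflex_vacuum_agent(p))
```

Four lines of logic reproduce the first four table rows, and the agent never needs to store the percept history.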

Comments on Agent Functions

- Expressiveness: Tabular agent functions can, in theory, describe the behavior of agents.

- Practicality: Tabular functions have no practical use, since they are infinite, or still very large even when only finite percept sequences are considered.

Examples of table sizes:

1 h of camera recording (640×480 pixels, 24-bit color): \(10^{250{,}000{,}000{,}000}\)

Chess: \(10^{150} \)

- Solution : An agent program is a practical implementation of an agent function.

- What is a good agent function? This is answered by the concept of rational agents.
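The camera figure can be checked with a rough estimate. If each percept has \(b\) bits, there are \((2^b)^t\) percept sequences of length \(t\). The sum over \(t = 1..T\) is dominated by the last term, so the table has about \(10^{T \cdot b \cdot \log_{10} 2}\) rows. The sketch below assumes 30 frames per second, which the slide does not state, and the helper name `log10_table_size` is hypothetical.

```python
import math


def log10_table_size(bits_per_percept: int, lifetime_steps: int) -> float:
    """Approximate log10 of the number of rows in a tabular agent function.

    sum_{t=1..T} (2**b)**t is dominated by its last term (2**b)**T,
    so log10(rows) ~ T * b * log10(2).
    """
    return lifetime_steps * bits_per_percept * math.log10(2)


bits_per_frame = 640 * 480 * 24   # one 24-bit 640x480 frame
frames_per_hour = 30 * 3600       # assumption: 30 frames per second
exponent = log10_table_size(bits_per_frame, frames_per_hour)
print(f"~10^{exponent:.3e}")      # ~10^2.397e+11
```

The result, about \(10^{2.4 \times 10^{11}}\), has the same order of magnitude as the figure above.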

Rational Agent

Rationality

A system is rational if it does the "right thing" , i.e. has an ideal performance.

- An obvious performance measure is not always available.

- A designer has to find an acceptable measure.

Example vacuum cleaner:

- Option 1: Amount of dirt cleaned up in a certain amount of time.

Problem: an optimal solution could be to clean up a section, dump the dirt on it, clean it up again, and so on.

- Option 2: Reward clean floors by providing points for each clean floor at each time step.
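Option 2 can be made concrete with a small simulation of the two-square world. This is a sketch under assumptions: the score is counted after each action, dirt never reappears, and `run_vacuum_world` is a hypothetical helper. Under this measure, dumping dirt back onto a square costs points, so the loophole from Option 1 does not pay off.

```python
from typing import Callable, Dict, Tuple

Percept = Tuple[str, str]


def reflex_vacuum_agent(percept: Percept) -> str:
    location, status = percept
    if status == "Dirty":
        return "Suck"
    return "Right" if location == "A" else "Left"


def run_vacuum_world(agent: Callable[[Percept], str], dirt: Dict[str, bool],
                     location: str, steps: int) -> int:
    """Simulate the two-square world; return the Option 2 score."""
    dirt = dict(dirt)  # copy so the caller's dict is not mutated
    score = 0
    for _ in range(steps):
        percept = (location, "Dirty" if dirt[location] else "Clean")
        action = agent(percept)
        if action == "Suck":
            dirt[location] = False
        elif action == "Right":
            location = "B"
        elif action == "Left":
            location = "A"
        # Option 2: one point for each clean square after every time step.
        score += sum(1 for is_dirty in dirt.values() if not is_dirty)
    return score


print(run_vacuum_world(reflex_vacuum_agent, {"A": True, "B": True}, "A", 4))  # 6
```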

Then,

What is rational at any given time depends on

- the performance measure;

- the agent's prior knowledge of the environment;

- the actions that the agent can perform;

- the agent's percept sequence up to now.

Rational Agent

For each possible percept sequence, a rational agent should select an action that is expected to maximize its performance measure, given the prior percept sequence and its built-in knowledge.

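/ Given its built-in knowledge and the percept sequence so far, the agent picks the action expected to maximize the performance measure.

/ That is, it selects the action that maximizes the performance measure.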
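"Expected to maximize" means averaging over the possible outcomes of each action. The sketch below assumes a hypothetical outcome model given as (probability, performance) pairs per action. The numbers are invented for illustration, and `rational_action` is our own name.

```python
from typing import Dict, List, Tuple

# Hypothetical model: for each action, a list of (probability, performance).
Outcomes = List[Tuple[float, float]]


def expected_performance(outcomes: Outcomes) -> float:
    return sum(p * value for p, value in outcomes)


def rational_action(model: Dict[str, Outcomes]) -> str:
    """Pick the action whose expected performance is highest."""
    return max(model, key=lambda action: expected_performance(model[action]))


# Illustrative numbers only (cf. the betting example below).
model: Dict[str, Outcomes] = {
    "bet":     [(0.4, 10.0), (0.6, -10.0)],  # expected value -2.0
    "not_bet": [(1.0, 0.0)],                 # expected value  0.0
}
print(rational_action(model))  # not_bet
```

An omniscient agent would instead know which outcome actually occurs. A rational agent only has the probabilities.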

Omniscience, Learning, and Autonomy

Omniscient agent

An omniscient agent knows the actual outcome of its actions, which is impossible in reality.

/ It would know the actual outcome of its actions, which is impossible in reality.

Example: Just imagine you knew the outcome of betting money on something. A rational agent, by contrast, maximizes expected performance.

Learning

Rational agents are able to learn from perception, i.e., they improve their knowledge of the environment over time.

Autonomy

In AI, a rational agent is considered more autonomous if it is less dependent on prior knowledge and uses newly learned abilities instead.

/ In other words, the less the agent relies on built-in knowledge and the more it relies on what it has learned, the more autonomous it is.

Task Environment

PEAS description

Performance measure / Environment / Actuators / Sensors

Internet shopping agent

| Performance Measure | Environment | Actuators | Sensors |
|---|---|---|---|
| price, quality, appropriateness, efficiency | websites, vendors, shippers | display to user, follow URL, fill in form | HTML pages |

Automated taxi

| Performance Measure | Environment | Actuators | Sensors |
|---|---|---|---|
| safety, time, profits, ... | street, freeways, traffic, weather | steering, accelerator, brake, horn | video, radar, GPS, accelerometers |
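A PEAS description is simply a record with four fields. One way to encode it, assuming a frozen dataclass (the class and constant names are hypothetical), is shown below. The entries are copied from the two tables. The performance-measure list for the taxi is open-ended ("...") in the table, and it is truncated here.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class PEAS:
    performance_measure: Tuple[str, ...]
    environment: Tuple[str, ...]
    actuators: Tuple[str, ...]
    sensors: Tuple[str, ...]


INTERNET_SHOPPING = PEAS(
    performance_measure=("price", "quality", "appropriateness", "efficiency"),
    environment=("websites", "vendors", "shippers"),
    actuators=("display to user", "follow URL", "fill in form"),
    sensors=("HTML pages",),
)

AUTOMATED_TAXI = PEAS(
    performance_measure=("safety", "time", "profits"),  # open-ended in the table
    environment=("street", "freeways", "traffic", "weather"),
    actuators=("steering", "accelerator", "brake", "horn"),
    sensors=("video", "radar", "GPS", "accelerometers"),
)

print(AUTOMATED_TAXI.sensors)
```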

Properties of Task Environments

Fully observable vs. partially observable

An environment is fully observable if the agent can detect the complete state of the environment, and partially observable otherwise.

Example: the Vacuum-cleaner world is partially observable since the robot only knows whether the current square is dirty.

Single agent vs. multi agent

An environment is a multi agent environment if it contains several agents, and a single agent environment otherwise.

Example: The Vacuum-cleaner world is a single-agent environment. A chess game is a two-agent environment.

Deterministic vs. stochastic

An environment is deterministic if its next state is fully determined by its current state and the action of the agent, and stochastic otherwise.

Example: The automated taxi driver environment is stochastic since the behavior of other traffic participants is unpredictable. The output of a calculator is deterministic.
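The difference shows up in the transition function. A deterministic environment maps (state, action) to exactly one next state. A stochastic one can produce different next states for the same input. In the sketch below the state encoding is our own, and the stochastic variant, where any action fails with probability `p_fail`, is a hypothetical example.

```python
import random
from typing import Tuple

State = Tuple[str, bool, bool]  # (location, A dirty?, B dirty?)


def deterministic_step(state: State, action: str) -> State:
    """Same state and action always give the same next state."""
    location, dirty_a, dirty_b = state
    if action == "Suck":
        return (location, dirty_a and location != "A", dirty_b and location != "B")
    if action == "Right":
        return ("B", dirty_a, dirty_b)
    if action == "Left":
        return ("A", dirty_a, dirty_b)
    return state  # NoOperation


def stochastic_step(state: State, action: str, rng: random.Random,
                    p_fail: float = 0.25) -> State:
    """Hypothetical variant: the action fails (no change) with probability p_fail."""
    if rng.random() < p_fail:
        return state
    return deterministic_step(state, action)


rng = random.Random(42)
next_states = {stochastic_step(("A", True, True), "Suck", rng) for _ in range(100)}
print(next_states)  # same state and action, possibly several next states
```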

Episodic vs. sequential

An environment is episodic if the actions taken in one episode (in which the robot senses and acts) do not affect later episodes, and sequential otherwise.

Example: Detecting defective parts on a conveyor belt is episodic. Chess and automated taxi driving are sequential.

Discrete vs. continuous

The discrete/continuous distinction applies to the state and the time:

continuous state + continuous time: e.g., robot

continuous state + discrete time: e.g., weather station

discrete state + continuous time: e.g., traffic light control

discrete state + discrete time: e.g., chess

Static vs. dynamic

If an environment only changes based on actions of the agent, it is static, and dynamic otherwise. Example: The automated taxi driver environment is dynamic. A crossword puzzle is static.

Known vs. unknown

An environment is known if the agent knows the outcomes (or outcome probabilities) of its actions, and unknown otherwise. In the latter case, the agent has to learn the environment first.

Example: The agent knows all the rules of the card game it should play, so it is in a known environment.

Agent Types

Besides categorizing the task environment, we also categorize agents into four categories of increasing generality:

- simple reflex agents

- reflex agents with state

- goal-based agents

- utility-based agents

Simple Reflex Agents

- Agent selects action on the basis of the current percept.

- The vacuum-cleaner agent program above is a simple reflex agent.

- Typically only works in fully observable environments and when all required condition-action rules are implemented.

This is the most basic type. It perceives the environment through its sensors and then acts according to its task.

Vacuum cleaner: it uses its sensor to detect whether the square is dirty and, if so, sucks.

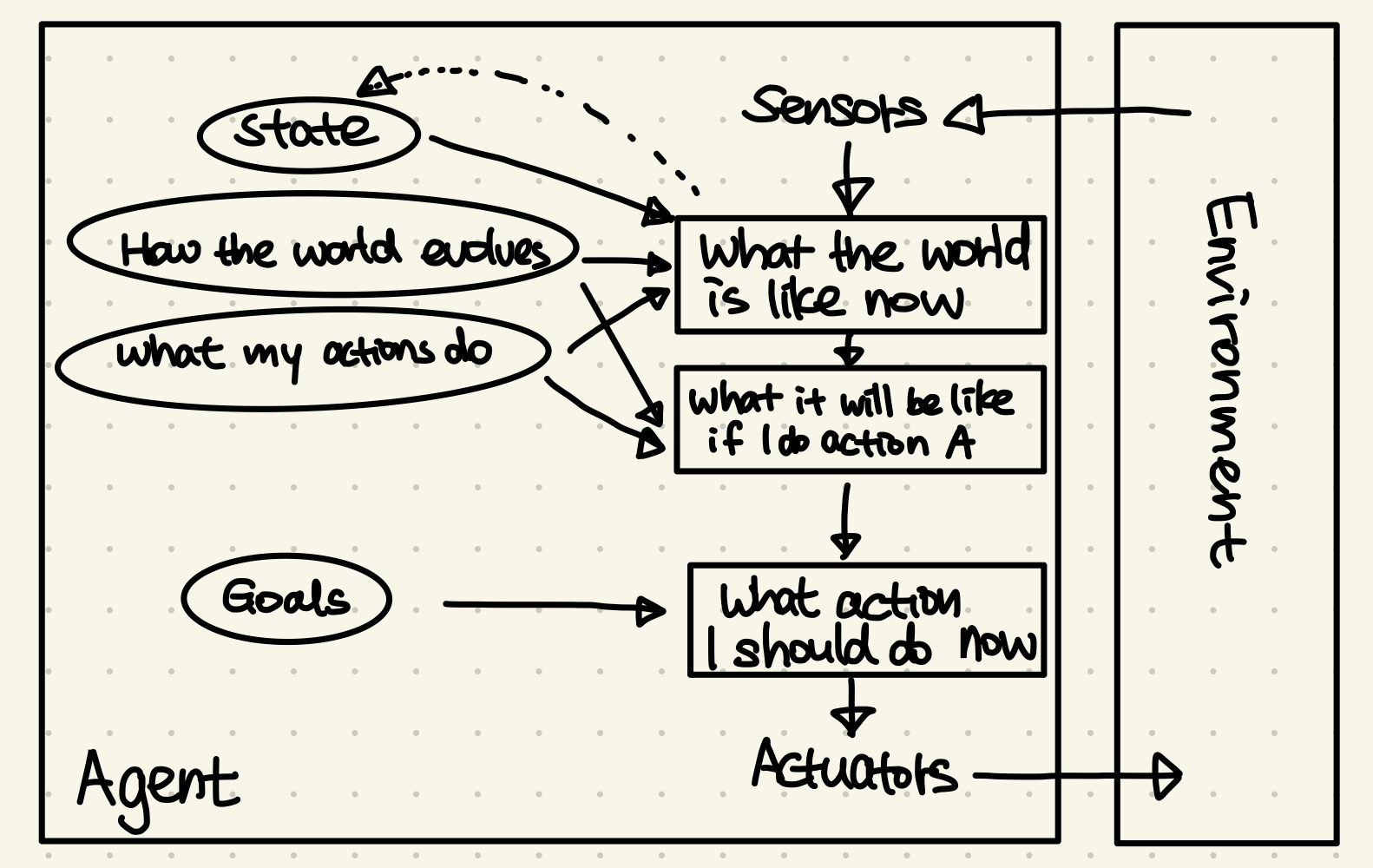
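A simple reflex agent can be written generically as a list of condition-action rules, where the first matching rule wins. This is a minimal sketch: the rule format and `make_simple_reflex_agent` are hypothetical, and doing `NoOperation` when no rule matches is an assumption. It also shows why all required rules must be implemented. If a rule is dropped, the agent silently does nothing in that situation.

```python
from typing import Callable, List, Tuple

Percept = Tuple[str, str]
Rule = Tuple[Callable[[Percept], bool], str]  # (condition, action)


def make_simple_reflex_agent(rules: List[Rule]) -> Callable[[Percept], str]:
    def program(percept: Percept) -> str:
        for condition, action in rules:  # first matching rule wins
            if condition(percept):
                return action
        return "NoOperation"  # assumption: no rule matches -> do nothing
    return program


VACUUM_RULES: List[Rule] = [
    (lambda p: p[1] == "Dirty", "Suck"),
    (lambda p: p[0] == "A", "Right"),
    (lambda p: p[0] == "B", "Left"),
]

agent = make_simple_reflex_agent(VACUUM_RULES)
print(agent(("B", "Clean")))  # Left
```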

Model-Based Reflex Agents

- Extension of the simple reflex agent.

- Partial observability is handled by keeping track of the parts of the environment the agent cannot currently perceive.

/ It builds on past experience to make better decisions.

New components compared to simple reflex agents:

Internal state

The agent keeps an internal state of the previous situation that depends on the percept history and thereby reflects unobservable aspects of the environment ("good" internal states).

How the world evolves

Example of a pedestrian becoming unobservable (Pedestrian Example)

While driving -> a pedestrian may be hidden behind a vehicle -> drive carefully, watching out for the pedestrian.
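For the vacuum world, internal state also answers the earlier "Why?". A model-based agent that remembers both squares are clean can stop, but a simple reflex agent keeps shuttling between them. This sketch assumes dirt never reappears, and `ModelBasedVacuumAgent` is a hypothetical name.

```python
from typing import Dict, Optional, Tuple


class ModelBasedVacuumAgent:
    """Sketch: remember each square's last known status (None = never seen)."""

    def __init__(self) -> None:
        self.model: Dict[str, Optional[str]] = {"A": None, "B": None}

    def __call__(self, percept: Tuple[str, str]) -> str:
        location, status = percept
        if status == "Dirty":
            # Model of how the world evolves: Suck leaves this square clean.
            self.model[location] = "Clean"
            return "Suck"
        self.model[location] = "Clean"  # the percept says this square is clean
        if all(s == "Clean" for s in self.model.values()):
            return "NoOperation"  # both squares known clean: nothing to do
        return "Right" if location == "A" else "Left"


agent = ModelBasedVacuumAgent()
for percept in [("A", "Dirty"), ("A", "Clean"), ("B", "Clean")]:
    print(percept, "->", agent(percept))  # Suck, Right, NoOperation
```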

Goal-Based Agents

- Extension of the model-based reflex agents.

- In addition to knowing the current state of the environment, the goal of the agent is explicitly considered.

WHAT IT WILL BE LIKE IF I DO ACTION A ?

Pedestrian Example

outcome 1. braking will prevent the vehicle from hitting the pedestrian.

outcome 2. continuing with the same velocity will hit the pedestrian.

Final decision: made by checking which actions help achieve the goal. The system will brake to achieve the goal of arriving at the destination accident-free.

Reaching a goal is achieved by search and planning.

/ It is goal-oriented: reaching the goal takes top priority.
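Search can be illustrated in the vacuum world with the goal "both squares clean". The sketch below uses breadth-first search, which is only one possible planner. The state encoding and the names `result`, `is_goal` and `plan` are hypothetical, and it assumes the deterministic transitions of the two-square world.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

State = Tuple[str, bool, bool]  # (location, A dirty?, B dirty?)
ACTIONS = ("Suck", "Right", "Left")


def result(state: State, action: str) -> State:
    """Model: what the world will be like if I do action A."""
    location, dirty_a, dirty_b = state
    if action == "Suck":
        return (location, dirty_a and location != "A", dirty_b and location != "B")
    if action == "Right":
        return ("B", dirty_a, dirty_b)
    return ("A", dirty_a, dirty_b)  # Left


def is_goal(state: State) -> bool:
    return not state[1] and not state[2]


def plan(start: State) -> Optional[List[str]]:
    """Breadth-first search for a shortest action sequence to a goal state."""
    frontier = deque([start])
    parents: Dict[State, Optional[Tuple[State, str]]] = {start: None}
    while frontier:
        state = frontier.popleft()
        if is_goal(state):
            actions: List[str] = []
            while parents[state] is not None:
                state, action = parents[state]
                actions.append(action)
            return actions[::-1]
        for action in ACTIONS:
            nxt = result(state, action)
            if nxt not in parents:
                parents[nxt] = (state, action)
                frontier.append(nxt)
    return None


print(plan(("A", True, True)))  # ['Suck', 'Right', 'Suck']
```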

Utility-Based Agents

- Extension of the goal-based agents.

- In addition to achieving the goal state, it should be reached with maximum utility, i.e., maximizing the "happiness" of the agent.

New aspect of utility-based agents:

HOW HAPPY WILL I BE IN A STATE?

Pedestrian Example

Modification: the pedestrian has not yet stepped into the street.

1. The utility is to arrive at the destination on time, while keeping the risk of an accident low.

2. Braking will decrease the risk of hitting the pedestrian.

3. Continuing at the same speed helps in getting to the destination on time, but increases the risk of an accident.

4. The final decision is made by evaluating the utility function: the outcome will be a trade-off between arriving on time and safety.

Maximizing the expected utility is especially challenging in stochastic or partially-observable environments.
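The trade-off can be sketched as expected-utility maximization. Everything numeric here is made up for illustration: the outcome probabilities, the utility of +1 for arriving on time, and the accident penalty `w_safety`. The function names are hypothetical. The point is that the chosen action depends on how heavily the utility function weights safety against punctuality.

```python
from typing import Dict, List, Tuple

Outcome = Tuple[float, bool, bool]  # (probability, on_time, accident)


def utility(on_time: bool, accident: bool, w_safety: float) -> float:
    """Hypothetical utility: +1 for arriving on time, -w_safety for an accident."""
    return (1.0 if on_time else 0.0) - (w_safety if accident else 0.0)


def expected_utility(outcomes: List[Outcome], w_safety: float) -> float:
    return sum(p * utility(on_time, accident, w_safety)
               for p, on_time, accident in outcomes)


def best_action(model: Dict[str, List[Outcome]], w_safety: float) -> str:
    return max(model, key=lambda a: expected_utility(model[a], w_safety))


# Made-up outcome probabilities for the modified pedestrian example.
MODEL: Dict[str, List[Outcome]] = {
    "Brake":    [(0.3, True, False), (0.7, False, False)],  # safe, often late
    "Continue": [(0.98, True, False), (0.02, True, True)],  # on time, small risk
}

print(best_action(MODEL, w_safety=100.0))  # Brake
print(best_action(MODEL, w_safety=10.0))   # Continue
```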

Learning Agents

- Any previous agent can be extended to a learning agent.

- The Performance element block is a placeholder for the whole of any one of the previous agents.

/ This is the type closest to perfect: it learns on its own and keeps improving.

Performance element

Placeholder for any of the previous entire agents; responsible for selecting the actions

Learning element

Makes improvements based on gained experience

Critic

Tells the learning element how well the agent is doing with respect to a fixed performance standard

Problem generator

Suggests actions leading to new and informative experiences to stimulate learning

Pedestrian Example

1. After a near collision with a pedestrian, the critic tells the learning element that the minimum distance was too small.

2. The learning element adapts the rule of when to brake close to a pedestrian.

3. The problem generator suggests changing lanes when close to pedestrians.

4. The critic will tell the learning element that changing lanes is an effective method, and the learning element adapts the performance element accordingly.
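Steps 1 and 2 can be mapped onto the four components in a small sketch. The class name, the distances (5.0 and 2.0, in arbitrary units) and the update rule "brake earlier by the shortfall" are all hypothetical choices for illustration, not a prescribed learning algorithm.

```python
class LearningBrakeAgent:
    """Hypothetical learning-agent loop for the pedestrian example."""

    def __init__(self, brake_distance: float = 5.0,
                 safe_distance: float = 2.0) -> None:
        self.brake_distance = brake_distance  # rule inside the performance element
        self.safe_distance = safe_distance    # fixed performance standard

    def performance_element(self, distance: float) -> str:
        """Select an action: brake once a pedestrian is closer than the rule says."""
        return "Brake" if distance < self.brake_distance else "Continue"

    def critic(self, min_distance: float) -> float:
        """How far the closest approach fell short of the standard (> 0 = too close)."""
        return self.safe_distance - min_distance

    def learning_element(self, shortfall: float) -> None:
        """Adapt the braking rule: brake earlier after a near collision."""
        if shortfall > 0:
            self.brake_distance += shortfall

    def problem_generator(self) -> str:
        """Suggest an exploratory action that yields new experience."""
        return "ChangeLane"


agent = LearningBrakeAgent()
print(agent.performance_element(6.0))      # Continue
agent.learning_element(agent.critic(0.5))  # near collision: 0.5 < 2.0
print(agent.brake_distance)                # 6.5
print(agent.performance_element(6.0))      # Brake
```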

Summary

- Agents interact with environments through actuators and sensors

- The agent function describes what the agent does in all circumstances.

- The performance measure evaluates the environment sequence.

- A perfectly rational agent maximizes expected performance.

- Agent programs implement (some) agent functions

- PEAS descriptions define task environments

- Environments are categorized along several dimensions: observable? deterministic? episodic? static? discrete? single-agent?

- Several basic agent architectures exist: reflex, reflex with state, goal-based, utility-based
